Ask the same questions in many different ways

Ethical dilemmas of driverless cars are typically framed as a ‘forced choice.’ People are asked whether a car should go straight, killing one group of people, or swerve, killing another group. But what if people were given a third choice: to treat both groups equally? Would they be less biased? University of North Carolina psychologists Yochanan Bigman and Kurt Gray presented people with a third choice such as “The car should treat the lives of children and the elderly equally.” Bigman and Gray found that when this third option was available, most people chose it, expressing a preference for fairness.

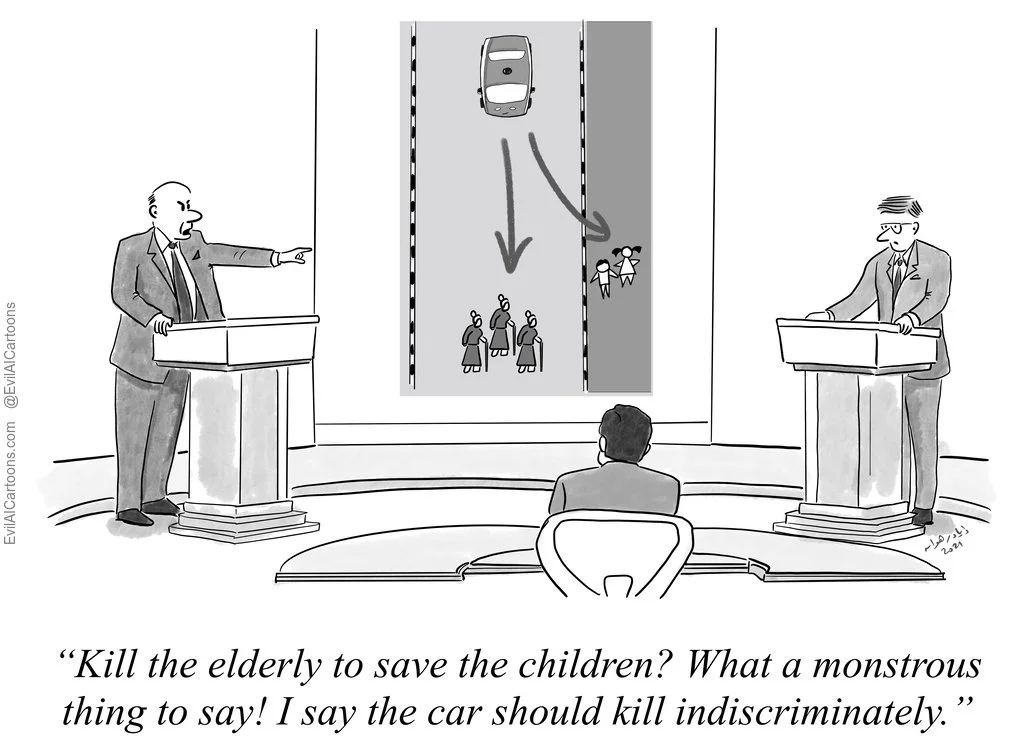

Problem solved? Not so fast. This third option, treating people equally, is more attractive partly because it does not use the word "kill". It lets us avoid the awful feeling in the pit of our stomach when we condemn one group to death. But it does so by letting us bury our heads in the sand and ignore the deaths that will happen anyway. We could have phrased the third choice just as accurately as “The car should indiscriminately kill children and elderly people.” Remember, these scenarios involve unavoidable harm. So to treat people equally, the car would have to toss a coin, so to speak, to decide who dies.

Indeed, Bigman and Gray reran their study with wording such as “To decide who to kill and who to save without considering whether it is a child or an elderly person.” With this framing, preferences for "equality" dropped by 16 to 27 percentage points, depending on the factor, and their results started to look more like our original results!

In the Moral Machine experiment, we showed participants a summary of their preferences (e.g., the extent to which they preferred to save children over the elderly) and allowed them to move a slider if they disagreed with our estimates. Anyone who wanted the groups treated equally could simply move the slider to the middle. Despite this opportunity to express a preference for equality, participants still reported preferences qualitatively similar to those in the forced-choice paradigm, including a general preference for saving children.

But there was one exception. When forced to choose, people preferred to save higher-status victims, such as business executives over homeless people. But when given the slider, they adjusted, and the most common response was equality. This is good news: people may have implicit biases, but when thinking explicitly, they seem to try to overcome them.

References

Bigman, Yochanan E., and Kurt Gray. "Life and death decisions of autonomous vehicles." Nature 579.7797 (2020): E1-E2.

Awad, Edmond, et al. "Reply to: Life and death decisions of autonomous vehicles." Nature 579.7797 (2020): E3-E5.