Avoid excessive precaution

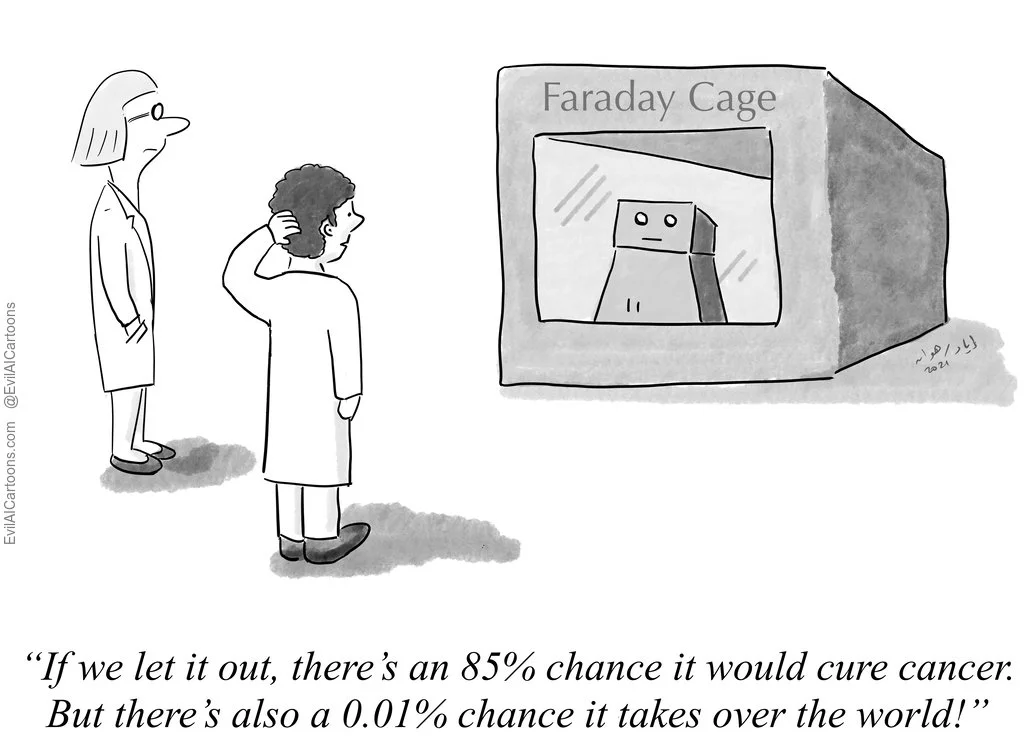

According to the precautionary principle, we must exercise caution before leaping into new technological innovations that may prove disastrous. The principle has many formulations, but its most extreme version entails a ban on any technological innovation that carries even a remote chance of irreparable damage, regardless of how great its possible advantages might be. This formulation has come under fire for being one-sided: it does not take into account the damage that can result from doing nothing.

In response to the paralyzing nature of this strong version, weaker formulations emerged that do not preclude weighing benefits against costs. Yet it has proven difficult to turn them into a coherent and precise approach. This has led public policy scholars such as Cass Sunstein to suggest reserving precaution for the most serious risks.

The relevant question, then, becomes: how serious is the catastrophic risk from AI? Theoretical investigations into long-term possibilities, such as that of Oxford philosopher Nick Bostrom, do bring out serious existential risks. However, the emerging consensus is that these risks still lie far in the future, and that more immediate problems deserve greater attention for now. It therefore seems that we can afford to continue our scientific progress, while remaining ready to revise our estimates of the likelihood of catastrophic risk as things develop. There are simply too many unknown unknowns to settle the question today.

Think of the control of fire, which many consider a monumental event in the history of humanity. Not only did it give us warmth, easily digestible cooked food, and safety from predators, but it also served as an engineering tool for making other things. Had we applied the strong precautionary principle for fear that fire might burn everything, we might plausibly still be sleeping in caves, and our lives would have remained nasty, brutish, and short. What future human thriving might we miss out on if we are overly cautious about AI?

References

Read, R. & O’Riordan, T. The Precautionary Principle Under Fire. Environment: Science and Policy for Sustainable Development 59, 4–15 (2017).

Brand, S. Whole Earth Discipline: An Ecopragmatist Manifesto. (Viking, 2009).

Bostrom, N. Superintelligence. (Dunod, 2017).

Sunstein, C. R. Laws of Fear: Beyond the Precautionary Principle. (Cambridge University Press, 2005).

Amodei, D. et al. Concrete Problems in AI Safety. arXiv 1–29 (2016).

Brown, K. S. et al. Fire as an engineering tool of early modern humans. Science 325, 859–862 (2009).