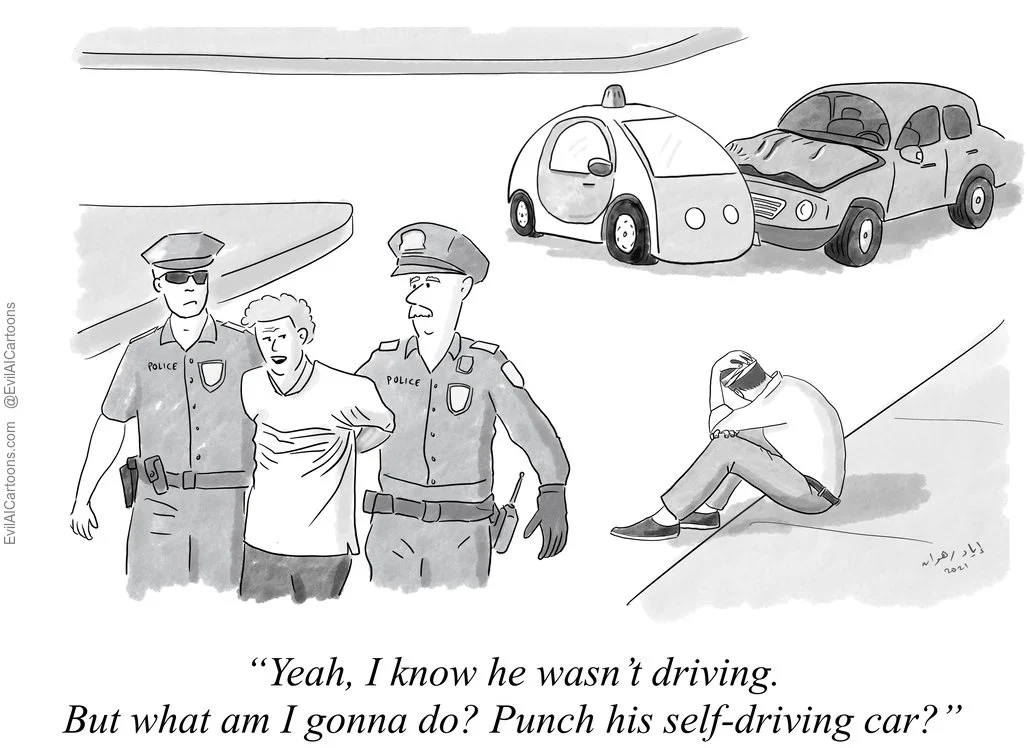

Do not use humans as scapegoats

At 9:58 pm on March 18, 2018, 49-year-old Elaine Herzberg was crossing Mill Avenue, a historic street in Tempe, Arizona, pushing her bicycle beside her. A prototype Uber self-driving car, a modified Volvo XC90, struck and killed her. The car's human safety backup driver, Rafaela Vasquez, did not intervene in time to prevent the collision. This became the first recorded case of a pedestrian killed by a self-driving car.

In the aftermath of the fatal crash, many were quick to blame Vasquez, the human safety driver. This was despite initial reports suggesting a serious failure of the technology. It did not help that evidence later emerged suggesting Vasquez may have been watching a streaming TV show rather than concentrating on the road. Eventually, Vasquez faced criminal charges. Uber did not face criminal charges, even though a US National Transportation Safety Board (NTSB) report found that the vehicle’s systems also failed to identify Herzberg. Herzberg’s family reached an undisclosed legal settlement with Uber, burying further exploration of the company’s potential civil liability.

In a project led by my former postdoctoral students Edmond Awad and Sydney Levine, we presented people with accident scenarios in which the AI and the human shared control of a vehicle. In one scenario, the human drove, and the AI was able to intervene, say, if the human did not brake in time. This driver assistance system is sometimes called a ‘guardian angel.’ Another scenario involved an AI driving, with a human ready to take over in case the AI erred. This is akin to Tesla’s Autopilot, and the Uber car mentioned above.

In our scenarios, both the human and the AI were at fault: the primary driver made a mistake, and the secondary driver failed to intervene. But no matter whether the human or the AI was the primary or secondary driver, people blamed the AI less.

This finding suggests that the public and experts may under-react to malfunctioning AI components of automated cars, or of AI systems more generally, because of a tendency to find the nearest human scapegoat (for example, the driver rather than the AI programmer). In the words of anthropologist Madeleine Clare Elish, the human acts as a ‘moral crumple zone’ for the AI.

References

Levin, S. & Wong, J. C. Self-driving Uber kills Arizona woman in first fatal crash involving pedestrian. Guardian (2018).

Said, C. Video shows Uber robot car in fatal accident did not try to avoid woman. SFGATE (2018).

Cellan-Jones, R. Uber’s self-driving operator charged over fatal crash. BBC News https://www.bbc.com/news/technology-54175359 (2020).

Uber in fatal crash had safety flaws say US investigators. BBC News https://www.bbc.com/news/business-50312340 (2019).

Neuman, S. Uber Reaches Settlement With Family Of Arizona Woman Killed By Driverless Car. NPR https://www.npr.org/sections/thetwo-way/2018/03/29/597850303/uber-reaches-settlement-with-family-of-arizona-woman-killed-by-driverless-car?t=1623517016553 (2018).

Awad, E. et al. Drivers are blamed more than their automated cars when both make mistakes. Nat Hum Behav 4, 134–143 (2020).

Elish, M. C. Moral crumple zones: Cautionary tales in human-robot interaction. Engaging Science, Technology, and Society 5, 40–60 (2019).