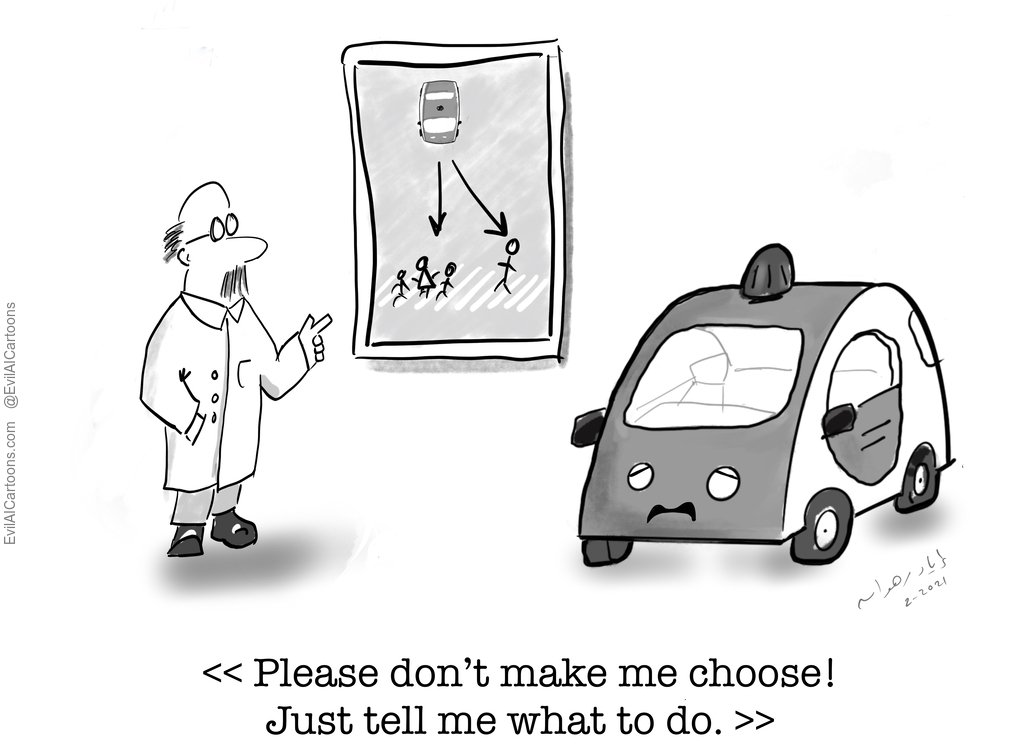

Only humans can solve ethical dilemmas

AI systems cannot figure out the solution to ethical dilemmas on their own. Doing so would imply that the machine has become a moral agent, capable of discerning right from wrong without explicit oversight.

While some AI researchers are exploring whether machines could derive ethical behavior in novel situations from a set of abstract principles, we are far from achieving this goal. In the meantime, it is humans who should bear the responsibility of thinking about and ‘solving’ ethical dilemmas in a way that is at least defensible.

AI systems will simply maximize the objectives we give them. It is up to us humans to specify the objectives and constraints that guide machine behavior.
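
To make the split between objectives and constraints concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than a real system: the `choose_action` helper, the delivery-robot scenario, and the speed values are all hypothetical. It shows only that the machine picks whatever scores highest among the options humans have allowed.

```python
from typing import Callable, Iterable, Optional, TypeVar

A = TypeVar("A")


def choose_action(
    actions: Iterable[A],
    objective: Callable[[A], float],
    constraints: Iterable[Callable[[A], bool]],
) -> Optional[A]:
    """Return the permitted action with the highest objective value.

    Returns None when no action satisfies every constraint.
    """
    rules = list(constraints)
    # Keep only the actions that pass every human-specified constraint.
    permitted = [a for a in actions if all(rule(a) for rule in rules)]
    # Among those, pick the one the objective scores highest.
    return max(permitted, key=objective, default=None)


# Hypothetical scenario: a delivery robot choosing a speed in km/h.
speeds = [5, 10, 20, 40]


def fastest(speed: int) -> float:
    """Objective supplied by humans: prefer higher speed."""
    return float(speed)


def within_speed_limit(speed: int) -> bool:
    """Constraint supplied by humans: never exceed 20 km/h."""
    return speed <= 20


unconstrained = choose_action(speeds, fastest, [])
constrained = choose_action(speeds, fastest, [within_speed_limit])
```

With no constraints, the sketch selects the highest speed on the list; with the speed limit in place, it selects the highest speed that the limit allows. The code never questions either the objective or the limit, which is the point: both were chosen by people.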