Allow people to challenge machine decisions

In Little Britain, a British sketch comedy series that aired from 2003 to 2007, David Walliams plays a character named Carol Beer. Carol is usually a customer service clerk or bureaucrat who sits behind a computer, typing for an inexplicably long time before condescendingly telling the client: “Computer says no!” At this point there is practically nothing the client can do to understand or contest the decision. They can, of course, ask to speak to the manager, to which Carol once more replies: “Computer says no!”

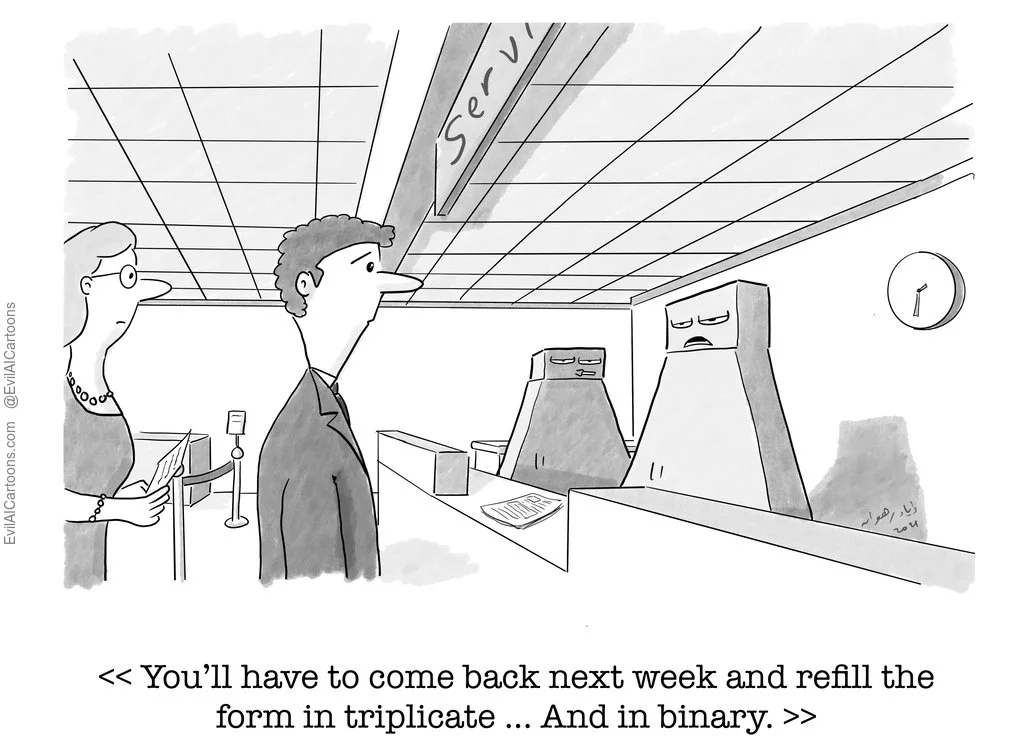

The catchphrase became a popular cultural shorthand not only for deliberately unhelpful customer service agents and bureaucrats, a phenomenon far older than computers, but also for our inability to question decisions made by computers and AI systems.

From the designers' perspective, the opacity, unaccountability, and uncontestability of decisions made by AI systems can often be a feature, not a bug. A low-tech version of this phenomenon is the so-called ‘dark pattern’: a user interface design that nudges you into purchasing overpriced items or makes it nearly impossible to delete your account on a website.

But even when we want to explain a decision, doing so is not always straightforward. Many AI systems of the 1980s, known as expert systems, were rule-based: they made decisions by combining explicit rules given to them by programmers, such as: “If the client makes less than $2,000 per month and is younger than 25, then do not approve the loan.” This style of programming makes decisions relatively easy to explain: you simply show the rule that triggered the rejection.
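To make this concrete, here is a minimal Python sketch of a rule-based decision procedure that returns its verdict together with the rule that produced it. The `Applicant` and `Rule` types, the `decide` function, and the assumption that an application is approved whenever no rule fires are hypothetical illustrations, not a reconstruction of any particular 1980s system.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Applicant:
    monthly_income: float  # in dollars
    age: int


@dataclass
class Rule:
    description: str                       # human-readable text, doubles as the explanation
    condition: Callable[[Applicant], bool] # True means "reject"


# Hypothetical rule base containing only the rule quoted in the text;
# a real expert system would hold many such rules.
RULES: List[Rule] = [
    Rule(
        description=("If the client makes less than $2,000 per month "
                     "and is younger than 25, then do not approve the loan."),
        condition=lambda a: a.monthly_income < 2000 and a.age < 25,
    ),
]


def decide(applicant: Applicant) -> Tuple[bool, Optional[str]]:
    """Return (approved, explanation).

    The explanation is the description of the first rule that rejected
    the applicant, or None if no rule fired (assumed here to mean approval).
    """
    for rule in RULES:
        if rule.condition(applicant):
            return False, rule.description
    return True, None


approved, reason = decide(Applicant(monthly_income=1500, age=22))
print(approved)  # False
print(reason)    # prints the text of the rule that fired
```

Because every rejection is traced to an explicit, human-readable rule, the explanation comes essentially for free.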

But many modern AI systems rely on far more complex statistical techniques, such as neural networks with millions or billions of parameters whose meaning is hard to extract, let alone explain to a lay person. These systems thus become black boxes, effectively beyond scrutiny. The field of Explainable AI (XAI) tries to address this problem by building AI systems whose outputs humans can understand. This work has become more pressing given growing public awareness of the problem and the emergence of legislation, such as the European Union's 2016 General Data Protection Regulation (GDPR), that promotes a “right to explanation” for algorithmic decisions.

References

Hyde, D. Who to blame when ‘computer says no’. The Telegraph (2013).

Gray, C. M., Kou, Y., Battles, B., Hoggatt, J. & Toombs, A. L. The Dark (Patterns) Side of UX Design. in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems 1–14 (Association for Computing Machinery, 2018).

Pasquale, F. The black box society: The secret algorithms that control money and information. (Harvard University Press, 2015).

Gunning, D. et al. XAI-Explainable artificial intelligence. Sci. Robot. 4 (2019).