Tame human overconfidence

One of the biggest selling points of autonomous vehicles (AVs) is the promise to eliminate 90% of all road accidents, because that is the proportion of accidents purportedly due to human error. It seems obvious, then, that we should allow AVs on the road as soon as they are safer than the average human driver. Indeed, there is a moral imperative to encourage mass adoption of AVs once they reach this milestone, because doing so will save lives. A 2017 RAND Corporation study simulated 500 future scenarios for AV adoption. In almost all of them, waiting until AVs are 90% (rather than 10%) safer than the average human driver meant failing to save thousands, if not hundreds of thousands, of lives in the long term: lives that would be lost while we perfect AV technology.

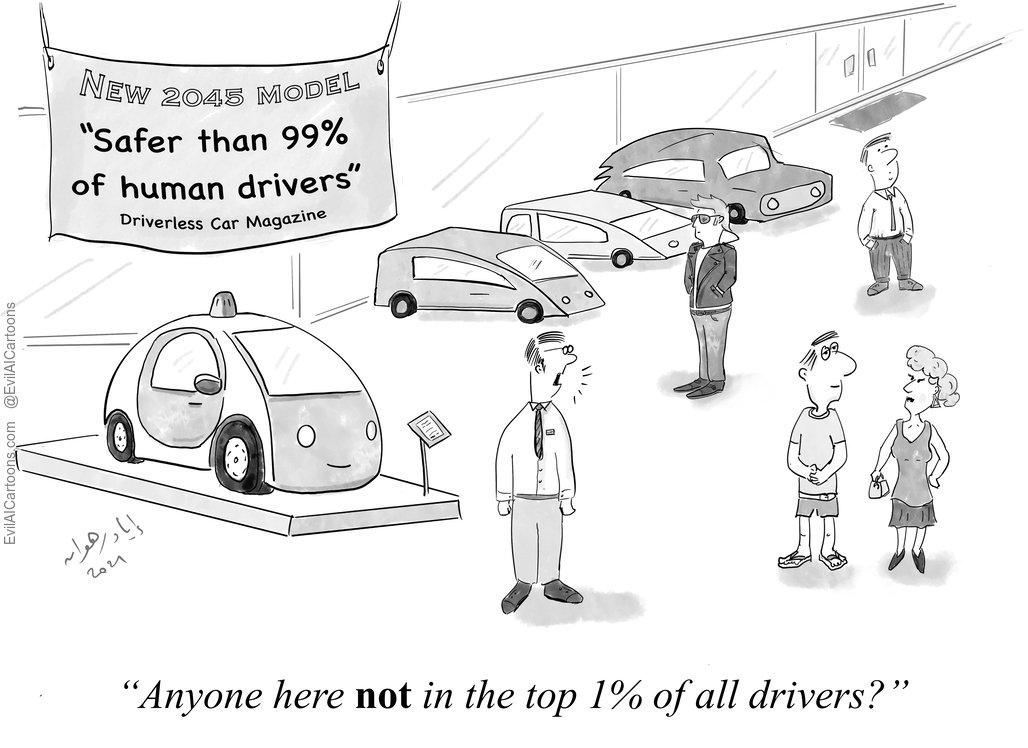

Unfortunately, broad acceptance of AVs that are only slightly better than average may be undermined by psychological biases that fester in the minds of consumers. One such bias is the ‘better-than-average effect’, or illusory superiority, whereby people overestimate their own abilities. Suppose AVs were safer than the average driver, but most people believed, incorrectly, that they themselves were very safe drivers. Would they then refuse to adopt AVs even though AVs are objectively better?

To explore this question, Azim Shariff, Jean-François Bonnefon and I surveyed a representative sample of Americans. On average, respondents thought that if everyone drove as they did, 66% of accidents would be eliminated. This pattern held for both men and women, in every age group, and at all education levels. Moreover, the higher people rated their own driving safety, the safer they wanted AVs to be before they were willing to adopt them. Only 34% of respondents would adopt AVs that were safer than half of all drivers, and a whopping 30% needed AVs to be safer than 90% of drivers before they would adopt them.
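The 66% figure is easier to interpret with a little arithmetic. Assuming every driver covers the same distance, and that a worse-than-average driver could answer with a negative share (an increase in accidents), a driver whose accident rate is r would say that a share 1 - r/m of accidents disappears if everyone drove like them, where m is the population mean rate. Averaged over a representative sample of accurate respondents, that share is exactly zero, because the mean of r is m. The Python sketch below checks this on a made-up population; the lognormal rate distribution and the helper names `share_eliminated` and `implied_relative_rate` are illustrative assumptions, not part of the study.

```python
import random
import statistics


def share_eliminated(own_rate: float, mean_rate: float) -> float:
    """Share of accidents that would disappear if every driver had
    `own_rate` instead of the current mean rate (equal mileage assumed)."""
    return 1.0 - own_rate / mean_rate


def implied_relative_rate(eliminated: float) -> float:
    """Own accident rate, as a multiple of the average, implied by a
    claimed elimination share (e.g. 0.66 means 0.34 times the average)."""
    return 1.0 - eliminated


# Hypothetical population: per-driver accident rates drawn from a
# right-skewed lognormal distribution. These numbers are invented.
rng = random.Random(42)
rates = [rng.lognormvariate(0.0, 0.75) for _ in range(10_000)]
mean_rate = statistics.fmean(rates)

# If every driver judged their own rate accurately, the average answer
# to "what share would be eliminated if everyone drove like you?" is 0
# (up to floating-point rounding).
answers = [share_eliminated(r, mean_rate) for r in rates]
avg_answer = statistics.fmean(answers)

# In a skewed distribution a majority can truly be safer than the mean,
# yet the accurate average answer above still comes out at zero.
below_mean = sum(r < mean_rate for r in rates) / len(rates)

print(f"average accurate answer: {avg_answer:.6f}")
print(f"share of drivers safer than the mean: {below_mean:.2f}")
print(f"own rate implied by a 66% answer: {implied_relative_rate(0.66):.2f} x average")
```

The point of the sketch is that being better than the mean is not impossible for many drivers; what cannot hold is that the typical respondent drives at roughly a third of the average accident rate, which is what a 66% answer implies.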

Autonomous driving is, of course, just one example. In any domain of human expertise, from medical diagnosis to judicial decisions, overconfident humans may cause harm if they decline to use AIs that outperform them. So, while we should not blindly trust inferior machines, neither should we blindly trust ourselves over superior ones. And to strike the right balance, we need reliable benchmarks of performance for humans and machines alike.

References

Bertoncello, M. & Wee, D. Ten ways autonomous driving could redefine the automotive world. McKinsey & Company 6, (2015).

Kalra, N. & Groves, D. G. The Enemy of Good: Estimating the Cost of Waiting for Nearly Perfect Automated Vehicles. (RAND Corporation, 2017).

Shariff, A., Bonnefon, J.-F. & Rahwan, I. How safe is safe enough? Psychological mechanisms underlying extreme safety demands for self-driving cars. Transp. Res. Part C: Emerg. Technol. 126, 103069 (2021).

Liu, P., Yang, R. & Xu, Z. How safe is safe enough for self-driving vehicles? Risk Anal. 39, 315–325 (2019).

Liu, P., Wang, L. & Vincent, C. Self-driving vehicles against human drivers: Equal safety is far from enough. J. Exp. Psychol. Appl. 26, 692–704 (2020).